This article explains how to call the Azure Cognitive Services APIs from Microsoft Flow (Power Automate) and use the flow from PowerApps. The Microsoft Flow team has released new connectors for the Azure Cognitive Services APIs, currently in preview, including Computer Vision and Face API. Still, I prefer the HTTP connector, which is the tested option.

Each connector has a different set of actions, and we use those actions by passing the proper inputs to them.

Requirements

- Face API URL & Key

- On-Premises Data Gateway – SQL Server

- Microsoft Flow – Free subscription or O365 subscription

Creating Face API

To create a Face API resource, you need an Azure subscription. If you don’t have one, you can sign up for a free Azure subscription.

Visit portal.azure.com and click “Create a Resource”.

Under New, choose “AI + Machine Learning” -> Face.

Create a new face resource by providing the required details.

Once the resource is created, get its key and URL (endpoint).

Note down the endpoint and key; we will use them in Microsoft Flow.

PowerApps

Sign in to your PowerApps account and click Canvas app from blank. Choose the Phone form factor option, provide a name for your app, and then click Create.

Once you have created the app, click Media on the Insert toolbar and then insert a Camera control on the screen to take the picture.

In the formula bar, the Camera property shows the number zero, which uses only the rear camera.

To use the front camera as well, we need a toggle. Insert a Toggle control from Controls and set its OnChange property to UpdateContext({EnableFront:!EnableFront})

Now select the camera, change its Camera property to If(EnableFront=true,1,0), and change its OnSelect property to ClearCollect(capturedimage,Camera1.Photo)

The camera is ready; now we need to insert an Image control from the Media tab so that we can see the captured image. Once the image has been inserted, change its Image property to First(capturedimage).Url.

Now we need to create a flow. Click the Action tab and then Flows; a pane opens with an option to create a new flow. Click it, and a new page opens showing the flow. Click New step and add the SharePoint Create file action to store the input image. You need to provide the Site Address, Folder Path, and File Name. For File Content, select Ask in PowerApps, so that the actual image file is sent from PowerApps as a parameter named Createfile_FileContent.

In the next step, we pass the file content to a Compose action, which stores it so that it can then be passed to the HTTP action.

In the Compose action, we convert the picture to binary using the expression dataUriToBinary(triggerBody()['Createfile_FileContent']). To do that, click the Inputs box, search for the dataUriToBinary function on the Expression tab, and choose Createfile_FileContent as its input.
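To see what dataUriToBinary does to the photo, here is a rough local analogue in Python. It is a sketch, not the Flow implementation, and it assumes the photo arrives from PowerApps as a base64 data URI of the form data:image/...;base64,...; the function name data_uri_to_binary is hypothetical.

```python
import base64


def data_uri_to_binary(data_uri: str) -> bytes:
    """Decode a base64 data URI (e.g. "data:image/png;base64,...") into raw bytes.

    Rough local analogue of the Flow expression dataUriToBinary(); only the
    base64-encoded form, assumed here for camera photos, is handled.
    """
    header, sep, payload = data_uri.partition(",")
    if not sep or not header.startswith("data:"):
        raise ValueError("not a data URI")
    if not header.endswith(";base64"):
        raise ValueError("only base64-encoded data URIs are handled here")
    # Everything after the comma is the base64 payload: decode it to bytes.
    return base64.b64decode(payload)


if __name__ == "__main__":
    # Hypothetical sample: the first four bytes of a PNG file, base64-encoded.
    sample = "data:image/png;base64," + base64.b64encode(b"\x89PNG").decode("ascii")
    print(data_uri_to_binary(sample))  # b'\x89PNG'
```

The HTTP action needs these raw bytes rather than the text of the data URI, because the request is sent with Content-Type: application/octet-stream.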

Next, we pass the binary file (the picture) to the HTTP action. This action can call any API given the URL, key, and requested fields. Choose New step, search for HTTP, and select it.

In the HTTP action, set Method to POST and URI to https://westcentralus.api.cognitive.microsoft.com/face/v1.0/detect (this is the West Central US endpoint; use the endpoint of your own Face resource).

For Headers:

- Ocp-Apim-Subscription-Key: the API key from Azure

- Content-Type: application/octet-stream

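Then provide the Queries. Add the key returnFaceAttributes with the following value, which lists the attributes to return for each detected face:

age,gender,emotion,smile,hair,makeup,accessories,occlusion,exposure,noise

Finally, set Body to the output of the Compose action, so that the binary picture is sent as the request body.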

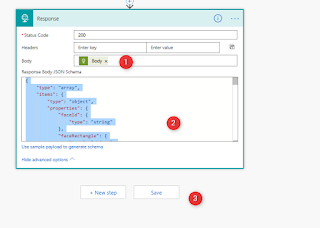
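To make the HTTP action's settings concrete, the Python sketch below builds the same POST request with the standard library without sending it. The endpoint and key are placeholders you must replace, and build_detect_request is a hypothetical helper; the query parameter name used here, returnFaceAttributes, is the plural form the Face API v1.0 detect operation expects.

```python
import urllib.parse
import urllib.request

# Assumed values: replace with the endpoint and key of your own Face resource.
FACE_ENDPOINT = "https://westcentralus.api.cognitive.microsoft.com"
FACE_KEY = "<your-face-api-key>"  # hypothetical placeholder
ATTRIBUTES = "age,gender,emotion,smile,hair,makeup,accessories,occlusion,exposure,noise"


def build_detect_request(image_bytes: bytes, endpoint: str = FACE_ENDPOINT,
                         key: str = FACE_KEY) -> urllib.request.Request:
    """Build (but do not send) the POST that the Flow HTTP action performs."""
    # Queries section: returnFaceAttributes=<comma-separated attribute list>
    query = urllib.parse.urlencode({"returnFaceAttributes": ATTRIBUTES})
    url = f"{endpoint.rstrip('/')}/face/v1.0/detect?{query}"
    return urllib.request.Request(
        url,
        data=image_bytes,  # raw binary body, like the Compose output
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/octet-stream",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_detect_request(b"...binary image bytes...")
    print(req.get_method(), req.full_url)
    # To actually send it (needs a real endpoint and key, plus `import json`):
    # with urllib.request.urlopen(req) as resp:
    #     faces = json.load(resp)
```

Building the request separately from sending it makes it easy to compare the URL and headers against what you typed into the HTTP action.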

Now we need another action to pass the result back to PowerApps. Choose New action, search for Response, set Status Code to 200, set Body to the Body output of the HTTP action, and paste the following into Response Body JSON Schema:

Code: {

Now save the flow. With the flow created, we need to connect it to PowerApps. Go back to PowerApps and add a button by clicking Insert -> Button. Connect the flow by clicking Action -> Flows and selecting the flow you created, then set the button's OnSelect property to ClearCollect(facedata,yourflowname.Run(First(capturedimage).Url)), replacing yourflowname with the name of your flow.

Next, create a gallery by clicking Insert -> Gallery -> Blank vertical. This adds a large gallery to the screen; resize it so that it covers the image. There are two things we need to do. First, add the data source: we want the gallery to show the detected faces, so we bind it to the result of face detection. In the gallery's Items property, select facedata from the drop-down; this links the data.

Second, insert a rectangle to mark each detected face, using Insert -> Icons -> Rectangle. Then change its properties to make it an unfilled rectangle: click the rectangle and increase its border thickness. You can also change the color and so forth. However, the rectangle is not yet dynamic: it always sits at the top of the window, so even when you run the app, it does not appear around the face.
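The Face API detect call returns a JSON array with one object per detected face; each object carries a faceRectangle (top, left, width, height) and, when requested, faceAttributes. ClearCollect(facedata, ...) stores that array, so each gallery row is one face. The Python sketch below shows that row structure; the sample body is a hypothetical, trimmed illustration rather than real API output, and FaceRow and parse_detect_response are hypothetical names.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class FaceRow:
    """One gallery row: the fields the PowerApps formulas read via ThisItem."""
    top: int
    left: int
    width: int
    height: int
    attributes: dict


def parse_detect_response(body: str) -> List[FaceRow]:
    """Turn the detect response (a JSON array, one object per face) into rows."""
    rows = []
    for face in json.loads(body):
        rect = face["faceRectangle"]
        rows.append(FaceRow(rect["top"], rect["left"], rect["width"],
                            rect["height"], face.get("faceAttributes", {})))
    return rows


# Hypothetical, trimmed response body for illustration only.
SAMPLE = """[
  {"faceId": "00000000-0000-0000-0000-000000000000",
   "faceRectangle": {"top": 120, "left": 80, "width": 100, "height": 100},
   "faceAttributes": {"age": 30.0, "gender": "female",
                      "emotion": {"happiness": 0.9, "neutral": 0.1}}}
]"""

if __name__ == "__main__":
    for row in parse_detect_response(SAMPLE):
        print(row.left, row.top, row.width, row.height, row.attributes["age"])
        # prints: 80 120 100 100 30.0
```

An empty array means no face was detected, in which case the gallery simply shows no rows.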
To place the rectangle around each face, we align it by setting its properties. First, set OnSelect (as shown in the picture) to:

Select(Parent)

Next, set the size and location of the rectangle. For Height, write:

ThisItem.faceRectangle.height*(Image1.Height/Image1.OriginalHeight)

Do the same for Width:

ThisItem.faceRectangle.width*(Image1.Width/Image1.OriginalWidth)

For X:

ThisItem.faceRectangle.left*(Image1.Width/Image1.OriginalWidth)

and for Y:

ThisItem.faceRectangle.top*(Image1.Height/Image1.OriginalHeight)

Each formula converts a value from the original photo's pixels to the size at which Image1 is displayed. To make the app interactive, set the Height, Width, X, and Y of Image1 to Camera1.Height, Camera1.Width, Camera1.X, and Camera1.Y respectively, and move Image1 to the top.

Now we add some labels to the screen to show the age, gender, expression, and so forth. Insert a new label and set its text to:

"Age: " & Gallery1.Selected.faceAttributes.age

Then add labels for the other attributes, such as gender, happiness, and neutral. For example:

"Happiness: " & Gallery1.Selected.faceAttributes.emotion.happiness

"Gender: " & Gallery1.Selected.faceAttributes.gender

To show the hair color, create a data table by clicking Insert -> Data Table and set its Items property to:

Gallery1.Selected.faceAttributes.hair.hairColor

To delete the photo, insert Insert -> Icons -> Trash and change its OnSelect to UpdateContext({conShowPhoto:false}).

Finally, click the Preview button, take a photo, check the app, delete the photo, and try again.
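The Height, Width, X, and Y formulas for the rectangle are a linear rescale: horizontal values are multiplied by the displayed width over the original width, vertical values by the displayed height over the original height. The Python sketch below reproduces that arithmetic, plus the label strings, under the assumption that the photo is stretched to fill Image1 with no letterboxing (otherwise an offset would also be needed). scale_rectangle and face_labels are hypothetical names.

```python
from typing import Dict, List, Tuple


def scale_rectangle(rect: Dict[str, float], shown_w: float, shown_h: float,
                    original_w: float, original_h: float) -> Tuple[float, float, float, float]:
    """Map a faceRectangle from original-photo pixels to displayed-image units.

    Returns (x, y, width, height), relative to the top-left corner of the
    displayed image; assumes the photo is stretched to fill the control.
    """
    sx = shown_w / original_w   # same role as Image1.Width / Image1.OriginalWidth
    sy = shown_h / original_h   # same role as Image1.Height / Image1.OriginalHeight
    return (rect["left"] * sx, rect["top"] * sy,
            rect["width"] * sx, rect["height"] * sy)


def face_labels(attrs: dict) -> List[str]:
    """Build the label texts shown for the selected face."""
    return [
        f"Age: {attrs['age']}",
        f"Gender: {attrs['gender']}",
        f"Happiness: {attrs['emotion']['happiness']}",
    ]


if __name__ == "__main__":
    # A 1280x960 photo displayed at 640x480 halves every coordinate.
    rect = {"left": 200, "top": 100, "width": 160, "height": 120}
    print(scale_rectangle(rect, 640, 480, 1280, 960))  # (100.0, 50.0, 80.0, 60.0)
```

Keeping the two scale factors separate matters when the displayed image's aspect ratio differs from the photo's; using one factor for both axes would misplace the rectangle in that case.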
